Selecting a mutual fund from among thousands is a tough job. If an investor were left entirely to herself to pick one, it would be a journey through hell.

However, there are several good people out there working to make life easier for the investor.

One of the solutions offered for this purpose is star ratings or rankings.

Companies like ValueResearch and Morningstar provide ratings, while CRISIL does rankings. (For those who are not aware, CRISIL is primarily a credit rating company and provides several information products to the financial services industry.)

If you have been using sites like Moneycontrol, you have probably noticed the CRISIL MF ranking shown against each fund.

Similarly, many media publications, fund houses, distributors and advisors use one or more of these in their investor communication.

Investors too consider these ratings and rankings as ‘investing signals’.

Now, I have written in the past that relying only on star ratings and rankings is not the right way to choose your mutual fund investments.

Please note that ratings and rankings are only filters: they give you a shortlist of funds to dig into further.

Having said that, let’s go a little deeper and understand the CRISIL MF ranking.

Decoding CRISIL Fund ranking

Unlike the star rating method, which uses only returns or risk-adjusted returns, CRISIL's ranking method goes further in its assessment.

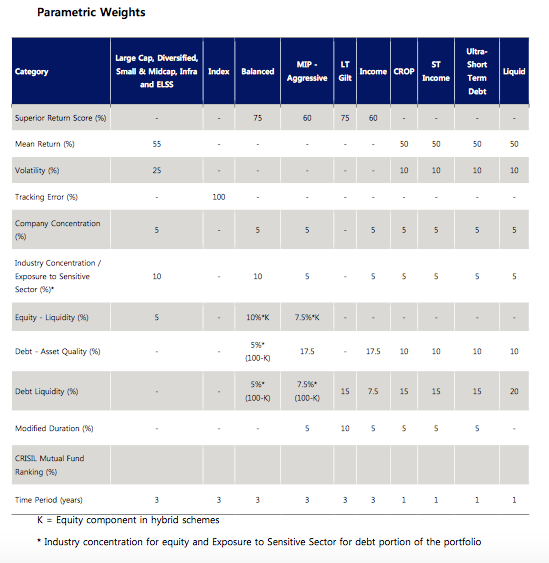

Have a look at the image below. This is a list of all the parameters and weightage used by CRISIL for its fund rankings.

Source. CROP stands for Credit Opportunities Fund.

CRISIL also uses portfolio parameters such as industry or sector concentration, top-holdings concentration and liquidity to determine the rank of the funds it covers.

For debt funds, asset quality (the overall credit rating of the investments) and modified duration (the price sensitivity of the portfolio to changes in interest rates) are also taken into account.
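For readers new to the term, the standard first-order approximation behind modified duration (general bond math, not anything specific to CRISIL's method) is shown below, where D_mod is the portfolio's modified duration, Δy is the change in yield and ΔP/P is the resulting percentage change in price:

```latex
\frac{\Delta P}{P} \approx -D_{\text{mod}}\,\Delta y
\qquad \text{e.g. } D_{\text{mod}} = 4,\ \Delta y = +0.005 \;\Rightarrow\; \frac{\Delta P}{P} \approx -4 \times 0.005 = -0.02
```

So a portfolio with a modified duration of 4 would lose roughly 2% of its value if yields rose by 0.5 percentage points.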

For pure equity funds, past returns are used as is.

For hybrid funds (a mix of equity and debt), performance is driven by both asset classes, so CRISIL calculates a Superior Return Score (SRS) using its own methodology.

Coming to the weightages, past performance is the BHEEM of the group: it gets more than half of the total weightage, and the other parameters share the rest.

The point clearly made here is that if you have not performed, you are not good.
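To make the weighting idea concrete, here is a minimal Python sketch of a weighted composite score. Only the 55% weight on mean returns comes from this post (it is the figure CRISIL uses for equity funds); the parameter names, the split of the remaining 45% and the min-max scaling are assumptions for illustration, and CRISIL's actual formula may well differ.

```python
# Minimal sketch of a weighted composite fund score.
# Assumptions: parameter names, the split of the non-return 45% and
# min-max scaling are illustrative; only the 55% return weight is from the post.

WEIGHTS = {
    "mean_return": 0.55,
    "volatility": 0.15,
    "industry_concentration": 0.10,
    "top_holdings_concentration": 0.10,
    "liquidity": 0.10,
}

# For these parameters a lower raw value is better, so the scaled score is flipped.
LOWER_IS_BETTER = {"volatility", "industry_concentration", "top_holdings_concentration"}


def normalise(values):
    """Min-max scale numbers to 0..1; if all values are equal, give everyone 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def composite_scores(funds):
    """funds: {name: {parameter: raw value}}. Returns {name: score}, higher is better."""
    names = list(funds)
    scores = {name: 0.0 for name in names}
    for param, weight in WEIGHTS.items():
        scaled = normalise([funds[name][param] for name in names])
        for name, s in zip(names, scaled):
            if param in LOWER_IS_BETTER:
                s = 1.0 - s
            scores[name] += weight * s
    return scores


# Hypothetical funds with made-up numbers.
funds = {
    "Fund A": {"mean_return": 14.0, "volatility": 18.0, "industry_concentration": 0.30,
               "top_holdings_concentration": 0.45, "liquidity": 0.9},
    "Fund B": {"mean_return": 12.0, "volatility": 12.0, "industry_concentration": 0.20,
               "top_holdings_concentration": 0.35, "liquidity": 0.8},
    "Fund C": {"mean_return": 10.0, "volatility": 14.0, "industry_concentration": 0.25,
               "top_holdings_concentration": 0.40, "liquidity": 0.7},
}

scores = composite_scores(funds)
for name in sorted(scores, key=scores.get, reverse=True):
    print(f"{name}: {scores[name]:.3f}")
```

Editing `WEIGHTS` is an easy way to see how strongly the final order leans on the return parameter.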

Eligibility Criteria

Let’s now see the criteria that determine if a particular fund or category will be ranked or not.

CRISIL uses some common filters here. The most relevant ones are:

- Only open-ended funds are considered.

- The fund needs a minimum NAV history of 3 years for equity, hybrid and long-term debt schemes such as gilt funds, and 1 year for all other debt funds.

- Only schemes that fall within the top 98 percentile of their category by Average Quarterly AUM are considered, so schemes that are too small are filtered out.

- The scheme should have disclosed its portfolio for each of the last 3 months of the quarter. So even a large, old scheme can be disqualified on this criterion alone.

- There have to be at least 5 schemes in a category for it to be considered for ranking.

So, CRISIL first applies the eligibility criteria to shortlist schemes, and then applies the parameters and weightages to these schemes to arrive at its final rankings.
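The eligibility filters above can be sketched in Python roughly as follows. This is not CRISIL's code: the field names are hypothetical, the function assumes all schemes belong to one ranking category, and the AUM rule uses one possible reading of "top 98 percentile" (keep the largest schemes that together make up 98% of the category's total AAUM).

```python
from dataclasses import dataclass

# Sketch of the eligibility filters for the schemes of ONE ranking category.
# Field names are hypothetical; the AUM rule uses an assumed
# "cumulative top 98% of category AAUM" reading.


@dataclass
class Scheme:
    name: str
    asset_class: str                  # "equity", "hybrid", "long_term_debt" or "other_debt"
    open_ended: bool
    nav_history_years: float
    avg_quarterly_aum: float          # any consistent unit, e.g. Rs crore
    months_disclosed_in_quarter: int  # monthly portfolio disclosures in the last quarter, 0..3


# Asset classes that need 3 years of NAV history; other debt funds need 1 year.
THREE_YEAR_CLASSES = {"equity", "hybrid", "long_term_debt"}


def min_history_years(asset_class):
    return 3 if asset_class in THREE_YEAR_CLASSES else 1


def large_enough(schemes, share=0.98):
    """Names of the largest schemes that together make up the top `share` of total AAUM."""
    total = sum(s.avg_quarterly_aum for s in schemes)
    kept, running = set(), 0.0
    for s in sorted(schemes, key=lambda x: x.avg_quarterly_aum, reverse=True):
        if running < share * total:  # still inside the top 98%: keep it
            kept.add(s.name)
        running += s.avg_quarterly_aum
    return kept


def eligible_schemes(schemes, min_schemes=5):
    """Shortlisted schemes, or [] if fewer than min_schemes survive.
    Counting the minimum after filtering is an assumption."""
    basic = [
        s for s in schemes
        if s.open_ended
        and s.nav_history_years >= min_history_years(s.asset_class)
        and s.months_disclosed_in_quarter == 3
    ]
    big = large_enough(basic)
    shortlist = [s for s in basic if s.name in big]
    return shortlist if len(shortlist) >= min_schemes else []


# Hypothetical category: six similar schemes plus one very small one.
schemes = [Scheme(f"Scheme {i}", "equity", True, 5, 1000, 3) for i in range(6)]
schemes.append(Scheme("Tiny", "equity", True, 5, 1, 3))
print([s.name for s in eligible_schemes(schemes)])
```

Here the tiny scheme falls outside the top 98% of category AAUM and is dropped, while the six larger schemes are enough to rank the category.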

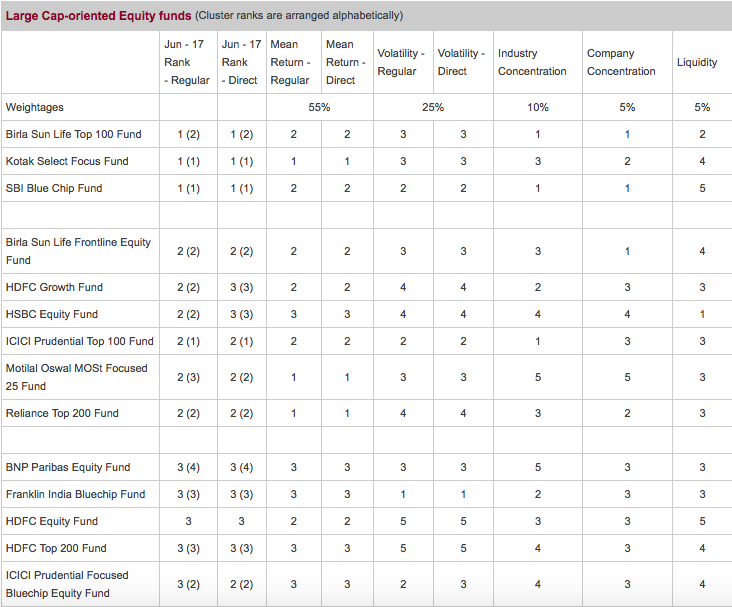

A CRISIL fund ranking table for Large cap oriented equity funds looks like this:

Note: a rank works the opposite way to a star rating. A 5-star rating is very good, but no fund wants rank 5. Rank 1 is the equivalent of a 5-star rating.

Talk about confusing!
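A small Python sketch of how the two scales relate. The rank-to-star mapping follows the note above (rank 1 equals 5 stars); the 10/20/40/20/10 percentile split used to cut five rank buckets from a sorted list of funds is an assumption for illustration, not a quote of CRISIL's published methodology.

```python
# Sketch: cut five rank buckets from a sorted list of funds and map them to stars.
# The 10/20/40/20/10 split is an assumption for illustration.

RANK_CUTOFFS = [(0.10, 1), (0.30, 2), (0.70, 3), (0.90, 4), (1.00, 5)]


def rank_from_position(position, total):
    """position 1 = best composite score among `total` ranked funds."""
    if not 1 <= position <= total:
        raise ValueError("position must be between 1 and total")
    share = position / total
    for cutoff, rank in RANK_CUTOFFS:
        if share <= cutoff:
            return rank
    return 5  # not reached for valid input; kept as a safe default


def rank_to_stars(rank):
    """Rank 1 (best) corresponds to 5 stars (best); rank 5 to 1 star."""
    return 6 - rank


for pos in (1, 5, 10, 17, 20):
    r = rank_from_position(pos, 20)
    print(f"position {pos}/20 -> rank {r} -> {rank_to_stars(r)} stars")
```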

CRISIL ranks funds in the following categories:

- Diversified Equity funds

- Small and Mid-cap Equity funds

- Thematic – Infrastructure funds

- Equity Linked Savings Scheme (ELSS)

- Index funds

- Balanced funds

- Monthly Income Plan – Aggressive

- Long Term Gilt funds

- Income funds

- Short Term Income funds

- Credit Opportunities funds

- Ultra Short-term Debt funds

- Liquid funds

So far so good.

Now, I have 2 issues with CRISIL fund ranking.

#1 Incorrect categorisation

HDFC Equity Fund and UTI Bluechip Flexicap are categorised as large cap when they are in fact multicap or flexicap funds. The fund documents say so.

Quantum Long Term Equity Fund is categorised as Diversified Equity while it is actually predominantly large cap.

HDFC Midcap Opportunities is categorised as mid cap when in fact its size rivals many large cap or flexicap funds. Ideally, it is a flexicap. (Here is another note of mine on HDFC Midcap.)

CRISIL has its own definitions for categorising funds, but they don't seem quite right.

Just because a multicap fund has a large exposure to large-cap stocks (more than 75%) does not mean it should be called a large cap fund. In fact, a big multicap fund is likely to have a higher exposure to large-cap stocks, because large caps are the most liquid place to deploy a big corpus.

Similarly, its definition of mid and small cap funds is hard to accept: a fund qualifies if it has less than 45% exposure to large caps. That ceiling is quite high for a midcap or small cap fund.

Incorrect categorisation leads to comparing apples with oranges.

CRISIL can and should pay more attention to this aspect to fine-tune its categorisation. In my view, most categories are clearly indicated by the fund houses themselves in the respective scheme-related documents.

Looking at the fund's benchmark is also a good way to understand its category. If a fund such as HDFC Equity has the Nifty 500 as its benchmark, it cannot be anything but a multicap fund.
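The categorisation problem can be sketched in Python by classifying a fund purely on its large-cap exposure (using the 75% and 45% thresholds quoted above) and cross-checking the result against its benchmark. The middle "diversified" band, the benchmark map and the 80% exposure figure in the example are all hypothetical, chosen only to illustrate the mismatch.

```python
# Sketch: exposure-based categorisation vs a benchmark cross-check.
# The middle "diversified" band and the benchmark map are assumptions.


def exposure_based_category(large_cap_pct):
    """Categorise a fund purely by its large-cap exposure (percent of portfolio)."""
    if large_cap_pct > 75:
        return "large cap"
    if large_cap_pct < 45:
        return "mid and small cap"
    return "diversified"


# Hypothetical, incomplete map from benchmark index to the category it implies.
BENCHMARK_CATEGORY = {
    "NIFTY 50": "large cap",
    "NIFTY 100": "large cap",
    "NIFTY 500": "diversified",  # a broad multicap benchmark
    "NIFTY MIDCAP 100": "mid and small cap",
}


def category_mismatch(large_cap_pct, benchmark):
    """True if exposure and benchmark disagree, False if they agree,
    None if the benchmark is not in the map."""
    implied = BENCHMARK_CATEGORY.get(benchmark.upper())
    if implied is None:
        return None
    return exposure_based_category(large_cap_pct) != implied


# A hypothetical multicap fund with 80% in large caps, benchmarked to the Nifty 500.
print(category_mismatch(80, "Nifty 500"))  # True: exposure says large cap, benchmark says multicap
```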

#2 Skewed weightage to performance

The biggest issue for me is that, for equity funds, the final rank equals the fund's performance rank in 90% of cases.

This is because performance (mean return) gets 55% of the weightage. For balanced (hybrid) funds, returns carry a 75% weightage. Gosh!

To my mind, this is incorrect. The best way to resolve this issue is to give equal weightage to returns and volatility.

Interestingly, CRISIL already does this for its debt funds. Look at the parameter and weightage table again: mean returns for debt funds (except Gilt and Income funds) have a 50% weightage.

And this clearly shows in the debt fund rankings: most composite (final) ranks for debt funds differ from their performance ranks.

CRISIL should make this consistent across all categories, with a 50% weightage to returns. (Personally, I would give returns much less weight.)
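If you want to check the "final rank equals performance rank" pattern yourself, a short Python sketch like the one below can compute the match rate per category from rows you copy out of a ranking table. The row layout is an assumption, and the sample rows are made up purely to show the calculation; they are not CRISIL data.

```python
from collections import defaultdict

# Sketch: share of funds whose final rank equals their performance rank, per category.
# Rows are (category, fund, performance_rank, final_rank); sample rows are made up.


def match_share_by_category(rows):
    """Fraction of funds per category whose final rank equals their performance rank."""
    totals, matches = defaultdict(int), defaultdict(int)
    for category, _fund, perf_rank, final_rank in rows:
        totals[category] += 1
        if perf_rank == final_rank:
            matches[category] += 1
    return {cat: matches[cat] / totals[cat] for cat in totals}


sample = [
    ("equity", "Fund P", 1, 1),
    ("equity", "Fund Q", 2, 2),
    ("equity", "Fund R", 3, 3),
    ("equity", "Fund S", 4, 3),
    ("debt", "Fund W", 1, 2),
    ("debt", "Fund X", 2, 1),
    ("debt", "Fund Y", 3, 3),
    ("debt", "Fund Z", 4, 5),
]

print(match_share_by_category(sample))  # {'equity': 0.75, 'debt': 0.25}
```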

CRISIL Fund Ranking – Take it with a pinch of salt!

As cautioned before, the CRISIL fund ranking is just a computation process: data goes in and ranks come out. As a first-level filter, it can work great.

However, as with any such system, the output is only as good as the data and the process. The inconsistent weightage given to returns is one such process issue.

Also note that the rankings do not take into account each fund's strategy, investment focus, etc. A fund whose mandate is to hold only 20 or 30 stocks will rank low on the concentration parameters.
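To see why a focused fund suffers here, the Python sketch below computes two common concentration measures: the share held in the top N positions, and the Herfindahl-Hirschman index (sum of squared portfolio weights). This post does not say which measure CRISIL uses or with what N, so both measures, N = 10 and the two equal-weighted portfolios are assumptions for illustration.

```python
# Sketch: two common portfolio concentration measures.
# Whether CRISIL uses either one, and with which N, is an assumption.


def top_n_share(weights, n=10):
    """Share of the portfolio held in its n largest positions."""
    total = sum(weights)
    return sum(sorted(weights, reverse=True)[:n]) / total


def herfindahl(weights):
    """Herfindahl-Hirschman index: sum of squared shares (1/k for k equal positions)."""
    total = sum(weights)
    return sum((w / total) ** 2 for w in weights)


focused = [5.0] * 20     # hypothetical 20-stock fund, 5% in each stock
broad = [100 / 60] * 60  # hypothetical 60-stock fund, equal weights

print(f"top-10 share: focused {top_n_share(focused):.0%}, broad {top_n_share(broad):.0%}")
print(f"HHI: focused {herfindahl(focused):.3f}, broad {herfindahl(broad):.3f}")
```

By either measure the focused fund looks more concentrated, even though holding 20 stocks may be exactly its mandate.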

Similarly, a fund whose mandate allows it to hold cash based on its assessment of the market will see a lower ranking in certain periods, simply because the returns parameter carries the highest weightage.

So, while the CRISIL fund ranking is a useful reference point or filter, as an investor you need to dig deeper into the funds before making your final investment call.

How do you interpret and use the CRISIL fund ranking? What other measures do you rely on to select your funds? Do share your views.
